This code shows how to calculate the log loss of a single training sample when the last layer of your neural network applies a sigmoid function for binary classification.
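To make the setup concrete, here is a minimal sketch of where the activation comes from. It assumes a hypothetical raw output `z` (the logit) from the last layer; the `sigmoid` helper and the value of `z` are illustrative and not part of the original. The sigmoid squashes `z` into the open interval (0, 1), and that activation is what the loss function receives:

```python
import math

def sigmoid(z):
    """Map a raw network output (logit) to an activation in (0, 1)."""
    return 1 / (1 + math.exp(-z))

def log_loss(act, target):
    return -target * math.log(act) - (1 - target) * math.log(1 - act)

z = 2.0           # hypothetical logit from the last layer
act = sigmoid(z)  # about 0.881, so the network leans toward class 1
print(log_loss(act, 1))  # small loss: the prediction agrees with target 1
print(log_loss(act, 0))  # larger loss: the prediction disagrees with target 0
```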

If you are here just for the code, grab this and strip the comments:

```python
import math

def log_loss(act, target):
    """ target == 0 -> -math.log(1 - act)
        target == 1 -> -math.log(act) """
    return -target * math.log(act) - (1 - target) * math.log(1 - act)
```
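Written as a formula, with $a$ for the activation `act` and $y$ for `target` (symbol names chosen here, not taken from the original), the function computes the expression below. Because $y$ is either 0 or 1, one of the two terms always vanishes, which is exactly what the docstring says:

```latex
$$
L(a, y) = -y \log a - (1 - y) \log(1 - a), \qquad y \in \{0, 1\},\ a \in (0, 1)
$$

$$
L(a, y) =
\begin{cases}
  -\log a       & \text{if } y = 1 \\
  -\log(1 - a)  & \text{if } y = 0
\end{cases}
$$
```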

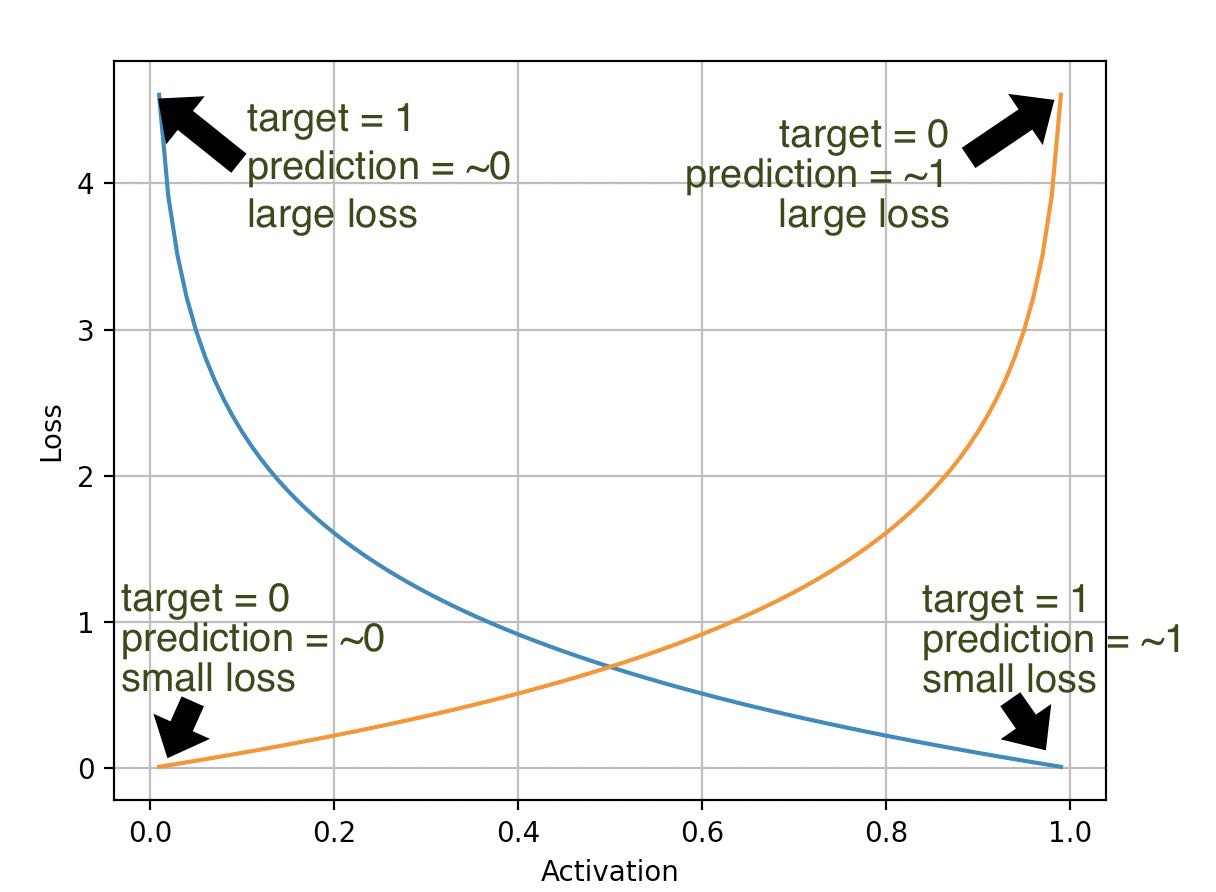

The goal of the logistic loss function is:

This is what that behavior looks like:
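A few hand-picked activations make the same behavior visible in numbers. This is a small sketch that redefines the `log_loss` function so it runs on its own; the sample activations are arbitrary:

```python
import math

def log_loss(act, target):
    return -target * math.log(act) - (1 - target) * math.log(1 - act)

for act in (0.99, 0.9, 0.5, 0.1, 0.01):
    loss_one = log_loss(act, 1)   # small when act is close to 1
    loss_zero = log_loss(act, 0)  # small when act is close to 0
    print(f"act={act:<5} target=1: {loss_one:.3f}  target=0: {loss_zero:.3f}")
```

The two columns mirror each other, and at an activation of 0.5 both targets give the same loss, ln 2 ≈ 0.693.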

If you want a full example that shows the graphic, take this code:

```python
import math

import matplotlib.pyplot as plt

def log_loss(act, target):
    """ target == 0 -> -math.log(1 - act)
        target == 1 -> -math.log(act) """
    return -target * math.log(act) - (1 - target) * math.log(1 - act)

fig, ax = plt.subplots()

# Activations strictly between 0 and 1; math.log(0) is undefined at the ends.
predictions = [r / 100 for r in range(1, 100)]  # -> [0.01, 0.02, ..., 0.99]
losses_for_target_one = [log_loss(p, 1) for p in predictions]
losses_for_target_zero = [log_loss(p, 0) for p in predictions]

# One curve per target value, labelled so the legend can tell them apart.
ax.plot(predictions, losses_for_target_one, label="target = 1")
ax.plot(predictions, losses_for_target_zero, label="target = 0")
ax.set_xlabel("Activation")
ax.set_ylabel("Loss")
ax.legend()
ax.grid(True)
plt.show()
```
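The function above raises `ValueError: math domain error` when `act` is exactly 0 or 1, because `math.log(0)` is undefined; the plotted activations stop at 0.01 and 0.99 for that reason. If your activations can reach exactly 0.0 or 1.0 (a sigmoid output rounds to 1.0 in floating point for large inputs, for example), a common workaround is to clip the activation into `[eps, 1 - eps]` first. This is a sketch, not part of the original; the name `safe_log_loss` and the default `eps=1e-7` are assumptions:

```python
import math

def safe_log_loss(act, target, eps=1e-7):
    """Log loss with the activation clipped into [eps, 1 - eps].

    Clipping keeps math.log away from 0, so act == 0.0 or act == 1.0
    returns a large but finite loss instead of raising ValueError.
    """
    act = min(max(act, eps), 1 - eps)
    return -target * math.log(act) - (1 - target) * math.log(1 - act)

print(safe_log_loss(1.0, 1))  # close to 0 instead of an error
print(safe_log_loss(0.0, 1))  # about 16.1, i.e. -log(1e-7)
```

Inside the interval the result is identical to `log_loss`; clipping only changes what happens at the extremes, at the cost of capping the maximum loss at roughly `-log(eps)`.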